Summary: Let’s delve into the process of fine-tuning GPT-3, a state-of-the-art language model. Understand the model thoroughly, work with datasets, and customize it to suit your needs making the most out of GPT-3.

“With GPT-3, businesses can tap into the AI’s potential and make smarter decisions, leading to great success.”

Have you ever wondered how with almost no human involvement, chatbots can comprehend and reply to client queries/questions? Or, how do virtual assistants like Apple Siri and Google Assistants swiftly understand and respond to human language?

The answer lies in the integration of GPT-enabled natural language processing (NLP) and state-of-the-art language models. GPT-3, a large language model, was created by OpenAI, the research laboratory.

Let’s explore the art of fine-tuning the model, to make it perform as desired. Remember, fine-tuning the language model can reduce the frequency of generating unreliable outputs from 17% to 5%.

So, without any further delay, let’s learn how to train GPT-3, unlocking the vast potential it offers to enhance AI-powered solutions.

Table of Content

What are Language Models (LLMs)?

Large language models are artificial-aided intelligence tools that are trained on massive amounts of knowledge-based data. This allows them to learn complex patterns and relationships between words and phrases in the language. The generic goal of larger language models is to perform probability distribution of the next word(s) in a sequence of words.

It is to be noted that before GPT-3, specific models used machine learning algorithms to conduct specific tasks. However, if we talk about training AI algorithms for GPT-3, which already has 170+ billion parameters, it requires huge datasets.

The understanding of the datasets or where the data is retrieved to train GPT-3 will be discussed later in this blog. The common application areas of the LLMs and natural language processing are:

- Spam detection

- Machine translation

- Text summarization

- Virtual assistants

- Speech recognition

- Sentiment classification

- Chatbots

What is GPT-3?

Generative pre-trained transformer 3 (GPT-3) has amazed the data science industry with massive trainable parameters. This AI model has been trained on massive datasets. It directly means that the model has been exposed to a vast range of data, including books, articles, websites, and audio-video visuals.

Data scientists all over the world consider GPT-3, as the largest language model. The word “largest” here is used because the model has been trained on the vast dataset of 175 billion parameters and 499 billion tokens.

- It has a neural network machine learning model that takes input text to understand the intent and, thereby, generates natural human language text.

- It uses both natural language generation and natural language processing to probabilistically determine what tokens from a known vocabulary will come next.

GPT-3, powered by Open AI, is a successor of their previous language model, GPT-2. This was launched in May 2020. GPT-3 is more formatted and has succeeded in providing superior performance in comparison to GPT-2. With a staggering 175 billion parameters, the full version of OpenAI’s GPT-3 is the largest language model over other language models.

Top Highlights of GPT-3: Key Takeaways

- The GPT-3’s performance increased exponentially as and when the model size, dataset size, and the amount of computation rises.

- GPT-3 can be a general solution for performing various natural language processing tasks, even without fine-tuning the model.

- GPT-3 uses a single model to perform a wide variety of downstream tasks with a high level of accuracy.

- GPT-3 is capable enough to perform various NLP tasks like text generation, summarisation, text classification, question-answering, translation, etc.

- The costs of best-performing language models are increasing at an unprecedented rate of at least 10 every year. This outpaces the growth of GPU’s memory.

- Resultantly, days are not far when embarrassingly parallel or traditional parallel processing methods will come to an end, and model parallelism will become indispensable.

GPT – 3 Model Architecture

GPT-3 is a successor to the GPT-2 model, an auto-regressive transformer (decoder) that uses the same tokenization approaches as used in GPT-2.

The main differences between GPT-2 and GPT-3 are

- Each model has additional decoder layers, and the dataset is extensive.

- The model’s layers are all parallelized, and matrix multiplication is included.

- Using a sparse transformer (like attention matrix factorization) to control memory.

There are basically eight different-size models of GPT-3, ranging from 125M to 175B. The previous record-holder, T5-11B, is successfully outperformed by the largest GPT-3 model. The size of the smallest GPT-3 model and the BERT-Base and RoBERTa-Base are roughly equivalent.

Let’s get started understanding the basics of these models:

- All GPT-3 models employ the same attention-based architecture as the GPT-2 generation.

- The smallest GPT-3 model with 125M includes 12x 64-dimension heads for each of the 12 attention levels.

- The largest GPT-3 model with 175B includes 96 attention layers, each of which heads for 96 x 128.

- It was stated that all of these models were trained on V100 GPUs with high bandwidth provided by Microsoft using the same amount of tokens (300 billion tokens).

| Model Name | Parameters | Layers | nheads | dheads | Batch Size | Learning Rate |

|---|---|---|---|---|---|---|

| GPT-3 Small | 125M | 12 | 12 | 64 | 0.5M | 6.0*10-4 |

| GPT-3 Medium | 350M | 24 | 16 | 64 | 0.5M | 3.0*10-4 |

| GPT-3 Large | 760M | 24 | 16 | 96 | 0.5M | 2.5*10-4 |

| GPT-3 XL | 1.3B | 24 | 24 | 128 | 1M | 2.0*10 |

| GPT-3 2.7B | 2.7B | 32 | 32 | 80 | 1M | 1.6*10-4 |

| GPT-3 6.7B | 6.7B | 32 | 32 | 118 | 2M | 1.2*10-4 |

| GPT-3 13B | 13.0B | 40 | 40 | 128 | 2M | 1.0*10-4 |

| GPT-3 175B | 175.0B | 96 | 96 | 128 | 3.2M | 0.6*10-4 |

After delving into the GPT-3 AI model, explore different types of AI models as well. These include supervised learning models, unsupervised learning models, reinforcement learning models, and deep learning models.

Understanding the Basics of GPT-3 Training Data

The size of the dataset must scale proportionally to the size of the model because neural networks are essentially compressed representations of the training data. Since the GPT 3 has 175 billion parameters with 499 tokens, here is the breakdown of the data retrieved:

| Dataset | Tokens |

|---|---|

| Common Crawl | 410 |

| WebText2 | 19 |

| Book1 | 12 |

| Book2 | 55 |

| Wikipedia | 3 |

A large chunk of the data is gathered via a common crawl corpus that includes text extracts, metadata, and raw web page data. To prevent any duplication, some filtering methods are utilized on top of this data at the document level. Together with the enhanced WebText collection, a high-quality curated dataset is also integrated.

Engaging Read: Go through this real-life scenario that illustrates the fine-tuning process of the GPT-3 model.

Training the Model

In order to train the GPT-3 model, a process similar to the one followed in training its predecessor, GPT-2 is used. Here the model predicts the next word in a sentence. Depending on the model’s size, they adjust the batch size (amount of data processed at once) and learning rate (how quickly the model learns).

For instance, GPT-3 with 125 million parameters uses a batch size of 0.5 million and a learning rate of 6.0×10^-4, while GPT-3 with 175 billion parameters uses a batch size of 3.2 million and a learning rate of 0.6×10^-4.

The massive 175 billion-parameter GPT-3 model required an enormous amount of computing power, equivalent to 3.14 x 10^23 floating-point operations (FLOPS) for training. Even with powerful GPUs, it would take hundreds of GPU years and millions of dollars to run the training process.

Did you know?

To train the same-size language model as GPT-3, anyone would require 355 years, costing $4.6M, even with the lowest-priced GPU cloud on the market.

Moreover, the model’s size, with 175 billion parameters, far exceeds the memory capacity of a single GPU. To overcome this, OpenAI uses a combination of techniques called model parallelism instead of the traditional one, namely embarrassingly parallel.

Running Inference

Unlike other language models like BERT or transformerXL, which require additional adjustments for getting multiple tasks performed, GPT-3 can handle all downstream tasks with one single model.

For instance, to use BERT for sentiment classification or QA, one needs to incorporate additional layers that run on top of the sentence encodings. Another simple example could be, when building a self-driving car, you have to add specialized sensors on top of the vehicle’s framework.

This is not the case with GPT-3. It’s a versatile tool that can be used for many language tasks without making big changes. Last year, OpenAI already showed GPT-2’s potential as a turn-key solution for a range of downstream NLP tasks without even fine-tuning.

On top of it, the new generation, GPT-3, is a more formatted approach for running inference and demonstrates superior performance. It works in a specific way. You can give it no examples, just one example, or a few examples related to the task you want it to do. Then, you provide a prompt (like a question or instruction), and GPT-3 uses all this information to figure out what to say, step by step. It’s like teaching it a little bit and letting it do the rest.

We will further understand this with an example given below. Conclusively, GPT-3 is like a multi-talented model that can handle different language jobs with a bit of guidance.

Expected Outcomes

When it comes to analyzing the GPT-3’s performance, it’s a must to see how fairly it deals with the task/question or query asked. Let’s delve into the outcomes and capabilities of this language model:

- Text Generation:

GPT-3 truly outperforms in text generation ability. It possesses the remarkable ability to generate human-like quality content. As and when you provide GPT-3 with a title, subtitle, and a simple prompt like “Article:” GPT-3 can craft short articles, roughly 200-400 words in length, that often fool readers into believing they were written by a human. You can even further ask it to increase or decrease the length of the result provided.

Did you know?

OpenAI’s user study revealed that distinguishing between articles generated by the massive GPT-3 model with 175 billion parameters and those composed by humans is challenging, with human accuracy hovering around 52%.

In contrast, the smallest GPT-3 model, with 125 million parameters, achieved a higher human detection accuracy of 76%. This underscores a critical point: increasing the model’s size significantly enhances its text generation capabilities.

- General NLP Tasks:

Apart from generating human-like responses, the wow feature offered by GPT models is the ability to get re-programmed without any additional fine-tuning.

GPT-3 is capable of performing dozens of downstream tasks, ranging from the usual players such as machine translation and question and answer to unexpected new tasks such as arithmetic computation and one-shot learning of novel words.

Let’s Test the GPT-3 Model

Here is a practical example of how GPT-3 responds to the given task or assignment. Let’s practical test the model and its response generation capabilities. The main intent here is to showcase how GPT-3 performs in response to specific tasks or prompts, including an explanation of various techniques such as zero-shot, one-shot, and few-shot learning.

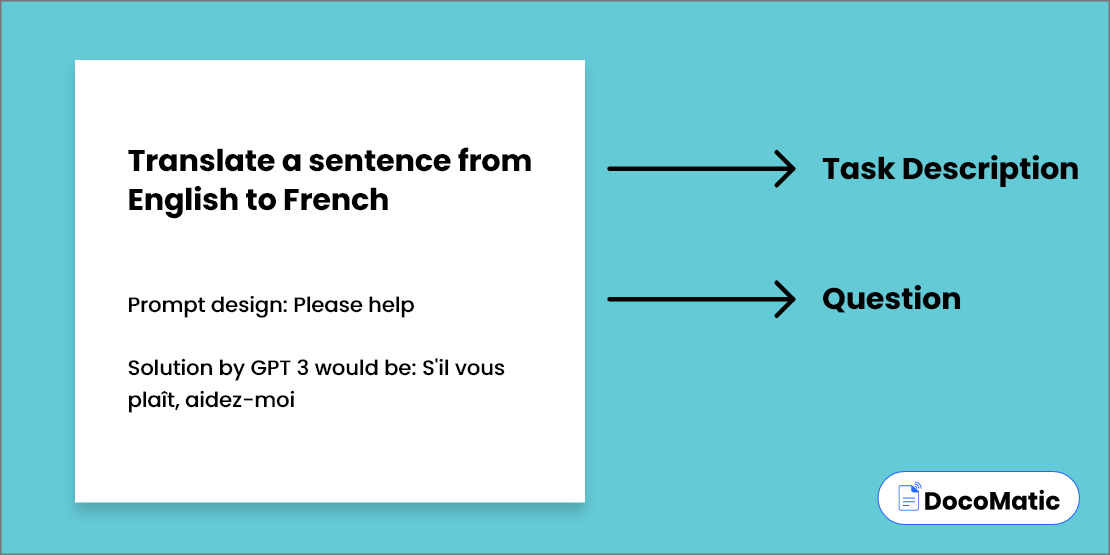

Zero-shot

We will explain the assignment to GPT-3 in Zero-shot before asking our query in the prompt. GPT-3 makes an effort to comprehend the description and provide the answer to the question.

- Assignment: The task assigned here is to “Translate a sentence from English to French”.

- Prompt design: Please help

- Solution by GPT 3 would be: S’il vous plaît, aidez-moi

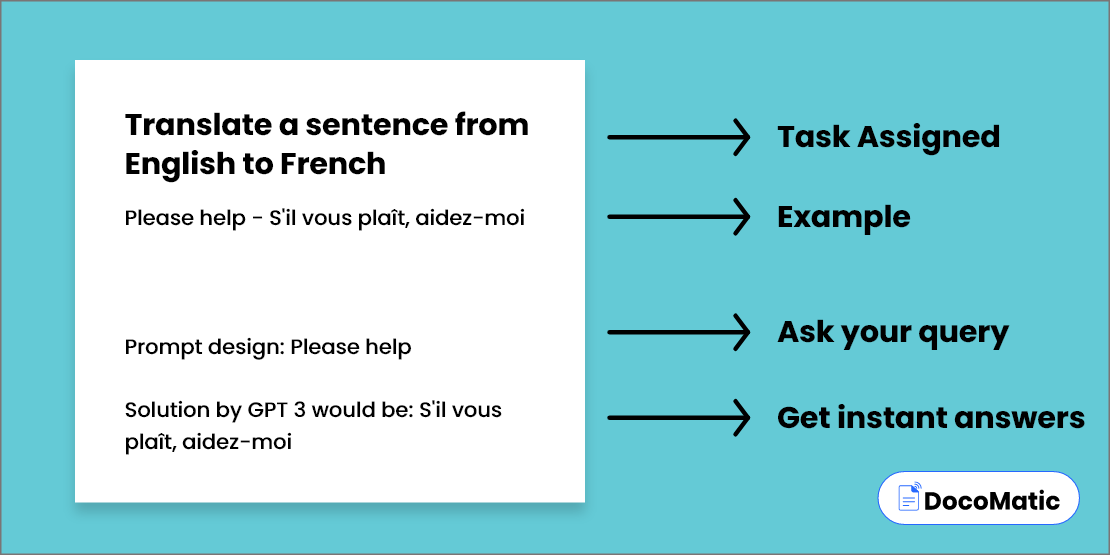

One shot

With One shot, you need to provide one example and the task description before asking any question or query to GPT-3.

- Assignment: The task assigned here is to “Translate a sentence from English to French”.

- One example: Please help – S’il vous plaît, aidez-moi

- Prompt design: Please help

- Solution by GPT 3 would be: S’il vous plaît, aidez-moi

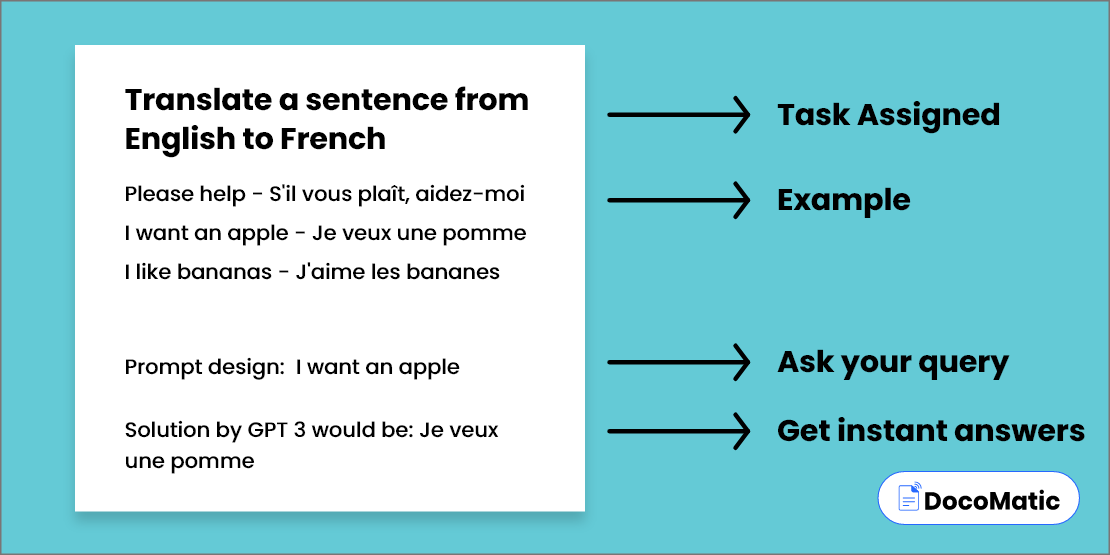

Few shots

Under a few shots, we’ll provide a number of samples and a work description before any questions.

- Assignment: The task assigned here is to “Translate a sentence from English to French”.

- One example: Please help – S’il vous plaît, aidez-moi

- Second example: I want an apple – Je veux une pomme

- Another example: I like bananas – J’aime les bananes

- Prompt design: I want an apple

- Solution by GPT 3 would be: Je veux une pomme

FAQs

A linear combination that predicts the future value based on past values. The term autoregression is a regression of the variable against itself.

When referring to language models, the term “meta-learning” refers to how quickly a model can adapt to or understand a task by using a broad range of skills and pattern recognition abilities that it gains throughout training.

Here are some pre-requirements to fine tune GPT-3:

- A powerful computer or cloud-based infrastructure with high-end graphics capable enough to handle massive amounts of data and computational power.

- A significant amount of training data in large text corpora can be preprocessed and fit into the model.

In order to prepare the training data, make sure that you remove the noise from the data. It further involves cleaning and tokenizing the data, converting it into a suitable file format, and dividing the data into the training and validation sets.

- First, customize the model in a way that better suits your specific task needs.

- After this, adjust the model parameters and hyperparameters of the model and train the datasets. By doing this, you can create a customized version of GPT-3 and get your task performed.

Some of the best practices available to train GPT-3 are

- Including a high-quality training set of data

- Selecting an appropriate architecture and hyperparameters

- Fine-tuning the model for specific tasks or applications

- Monitoring the performance throughout the training process.

There are various uses of GPT-3 that are mentioned here:

- Natural language generation for chatbots

- Automated content creation

- Language translation

- Sentiment analysis

- Question answering

- Information retrieving tasks.

Conclusion

After going through this detailed guide on how to train GPT-3, you must be convinced that GPT-3 is a remarkable innovation. It surpasses human tendencies and boosts the capabilities of language models to an incredible level. With its advanced capabilities, anyone can unleash the full potential of GPT-3 and take their NLP applications to the next level.

So, with GPT-3, the possibilities are endless. So try today and see what new exciting ideas and breakthroughs the future holds for you.