On March 14, 2023, OpenAI released GPT-4, the latest iteration of its Generative Pre-Trained Transformer system. OpenAI’s previous models, GPT-3 followed by GPT-3.5, have gained worldwide recognition and have been adopted by everyone from individual developers to large companies like Microsoft. Now GPT-4 is expected to go beyond the capabilities of those models.

OpenAI also published a video, “GPT-4 Developer Livestream”, on its official YouTube channel, explaining some of GPT-4’s advanced features and capabilities. Rumors circulating online claim that GPT-4 has 100 trillion parameters, but OpenAI has not disclosed the model’s size, so treat that figure as unconfirmed. In this blog, we cover a brief background of GPT-4, its new features, and an example of each. So, let’s get started!

Background of GPT-4

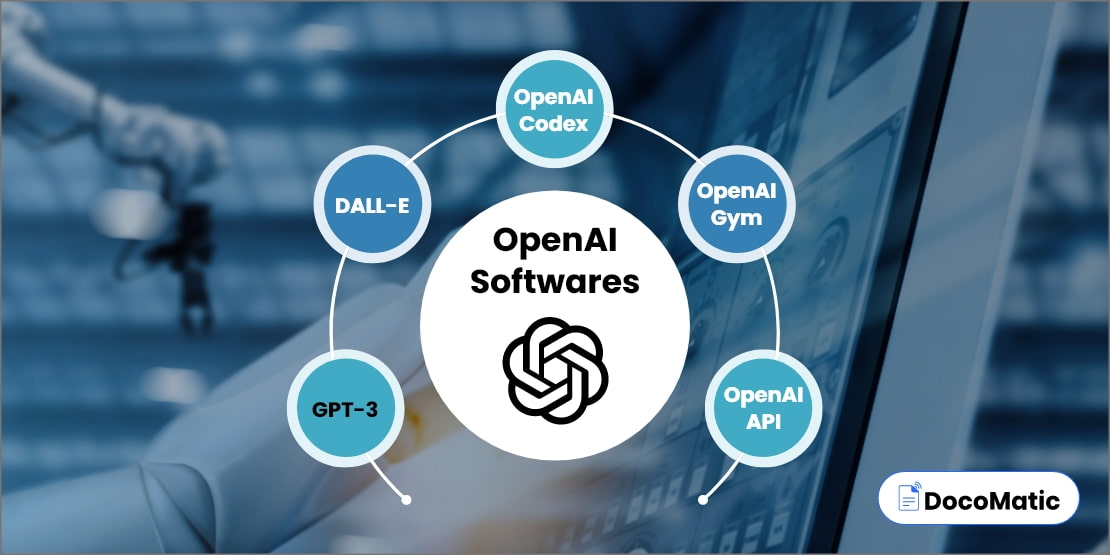

GPT, an abbreviation of Generative Pre-Trained Transformer, is a language model that uses deep learning and Natural Language Processing (NLP) techniques to generate human-like responses.

Every iteration from GPT-1 to GPT-3 introduced significant features and improved on its predecessor. The table below summarizes each model’s release date, training data size, number of parameters, maximum sequence length, and key features and functionalities.

| Model | Release Date | Training Data Size | Number of Parameters | Maximum Sequence Length (tokens) | Key Features & Functionalities |
|---|---|---|---|---|---|
| GPT-1 | June 2018 | About 5 GB | 117 million | 512 | Text completion, article summarization, question answering |
| GPT-2 | February 2019 | 40 GB | 1.5 billion | 1024 | Natural language generation, text completion, translation, summarization |
| GPT-3 | June 2020 | 570 GB | 175 billion | 2048 | Human-like text generation, advanced question answering, reasoning and inference |

The first iteration, GPT-1, was released in June 2018, and the latest large language model in the series, GPT-4, was released on March 14, 2023.

Top 3 Features of GPT-4

OpenAI announced GPT-4 on Twitter as a “large multimodal model”. Multimodal models are machine learning models that can process and integrate multiple modalities, such as text, images, video, and audio. The term itself signals that GPT-4 handles more than just text input this time.

GPT-4 brings three new features that extend its capabilities beyond those of previous large language models:

1. Creativity

GPT-4 takes creativity to a new level. You can ask it to write screenplays, compose songs, play with words, imitate your writing style, or take on any other creative task you can think of, and it handles them with ease.

In the demonstration video posted by OpenAI on its official YouTube channel, Greg Brockman, President and Co-Founder of OpenAI, showcased the features of GPT-4. He pasted in an article and asked GPT-3.5 to summarize it in one sentence in which every word starts with the letter “G”. GPT-3.5 could not satisfy the constraint.

He then gave GPT-4 the same task, and it produced a fluent, meaningful summary that met the constraint, outperforming GPT-3.5.
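If you want to try this kind of constrained prompt yourself, it helps to check the model’s answer automatically. The snippet below is a minimal sketch of such a check. The function name `words_start_with` and the sample sentences are made up for illustration and are not actual model output.

```python
import string


def words_start_with(sentence: str, letter: str) -> bool:
    """Return True if every word in `sentence` starts with `letter` (case-insensitive)."""
    # Strip leading/trailing punctuation so "great," is judged by its first letter.
    words = [w.strip(string.punctuation) for w in sentence.split()]
    # Drop tokens that consisted only of punctuation.
    words = [w for w in words if w]
    # An empty sentence does not satisfy the constraint.
    return bool(words) and all(w[0].lower() == letter.lower() for w in words)


# Illustrative sentences only, not real GPT-3.5 or GPT-4 output.
print(words_start_with("GPT-4 generates great, grounded guidance.", "g"))
print(words_start_with("GPT-4 generates useful guidance.", "g"))
```

The first call passes the check, while the second fails because “useful” breaks the constraint.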

For example: if you have a story that needs to be turned into a poem, just paste the text into GPT-4, explain your requirements, and it will do the work for you.

2. Visual input

Earlier GPT models, up to and including GPT-3.5, accepted only text input. GPT-4 accepts images alongside text and responds in text; it does not take video or audio input. With these capabilities, GPT-4 can generate code, interpret images, and work across many languages (OpenAI reports evaluating it in 26 of them).

This makes the model more versatile and adaptable to a broader range of input data, which is a significant capability for any AI tool.

With ChatGPT, users were restricted to text input. GPT-4 removes that restriction: you can upload an image with a suitable prompt, and GPT-4 will answer based on both. So, if you have an image and a question about it, GPT-4 is ready to answer.

For example: if you have a math problem written on paper and want an answer, take a photo, upload it to GPT-4, and ask it to solve the problem. The model will typically respond within seconds, though it is worth double-checking its answer on harder problems.
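For developers, a photo-plus-question request might look roughly like the sketch below. It only builds the message payload and makes no network call. The message shape follows the format OpenAI documents for its vision-capable chat models; whether your account and chosen model accept image input is an assumption, and the helper name `build_image_question` is hypothetical.

```python
import base64


def build_image_question(image_bytes: bytes, question: str,
                         mime: str = "image/jpeg") -> list[dict]:
    """Build a chat message list holding one text part and one inline image part."""
    # Encode the raw image bytes as a base64 data URL so the image travels in the request body.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url",
             "image_url": {"url": f"data:{mime};base64,{encoded}"}},
        ],
    }]


# Placeholder bytes stand in for a real photo read with open(path, "rb").read().
messages = build_image_question(b"fake-image-bytes", "Please solve the problem in this photo.")
print(messages[0]["content"][0]["text"])
```

The resulting `messages` list is what you would pass to a chat completion request for a model that supports image input.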

3. Longer context

This feature sets GPT-4 apart from its predecessors, GPT-3 and GPT-3.5. Previously, users could input only about 3,000 words. Now, GPT-4 accepts up to 25,000 words.

This is useful for users who want to supply longer texts for better context. After providing a large document, you can summarize it, extract quick insights, or ask follow-up questions about it.

This extended capacity of up to 25,000 words is GPT-4’s noteworthy contribution to the development of natural language processing. It has the potential to significantly impact use cases such as document analysis, conversational AI, and content generation.

For example: if you want a summary of a long article, just paste in its content, explain what you expect, and GPT-4 will summarize it for you.
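If a document is longer than the limit, one simple workaround is to split it into pieces that each fit. The sketch below uses the 25,000-word figure quoted above as its budget. Note that the model’s real limit is measured in tokens, so a word count is only a rough proxy, and the function name `split_into_word_chunks` is made up for illustration.

```python
def split_into_word_chunks(text: str, max_words: int = 25_000) -> list[str]:
    """Split `text` into consecutive chunks of at most `max_words` words each."""
    words = text.split()
    # Step through the word list in strides of max_words and re-join each slice.
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


# A synthetic 60,000-word "document" for demonstration.
document = "word " * 60_000
chunks = split_into_word_chunks(document)
print(len(chunks), [len(c.split()) for c in chunks])
```

Each chunk can then be summarized separately, and the partial summaries combined in a final prompt.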

To get a deeper understanding of the ins and outs of GPT-4, watch OpenAI’s “GPT-4 Developer Livestream” video.

FAQs

A large language model is an AI model designed to approach human-level performance on language tasks. Because it is trained on massive datasets, GPT-4

GPT-4 is available to ChatGPT Plus subscribers and as an API for developers building applications and services. To use the API, developers have to join the API waitlist.

According to OpenAI’s internal evaluations, GPT-4 is 82% less likely than GPT-3.5 to respond to requests for disallowed content and 40% more likely to produce factual responses.

GPT-4 is the latest version of OpenAI’s GPT models. It brings improved creativity, visual (image) input, and support for longer input. These and other new features make GPT-4 an exceptional new language model.

Currently, GPT-4 has no free version, unlike GPT-3.5, which powers the free tier of ChatGPT. But if you are a ChatGPT Plus user, you can access it within your subscription.

ChatGPT Plus, which includes access to GPT-4, costs $20 per month. For API access to the 8k model, OpenAI charges $0.03 per 1K input (prompt) tokens and $0.06 per 1K output (completion) tokens. For the 32k model, it charges $0.06 per 1K input tokens and $0.12 per 1K output tokens.
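To see what these API rates mean in practice, here is a small cost estimator based only on the per-1K-token prices quoted above. The dictionary keys `"8k"` and `"32k"` and the function name `estimate_cost` are labels chosen for this sketch, not official model identifiers, and prices may change over time.

```python
# USD per 1K tokens as (input, output), taken from the prices quoted in this article.
PRICES_PER_1K = {
    "8k": (0.03, 0.06),
    "32k": (0.06, 0.12),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request from its input and output token counts."""
    input_price, output_price = PRICES_PER_1K[model]
    return input_tokens / 1000 * input_price + output_tokens / 1000 * output_price


# Example: a 1,500-token prompt with a 500-token answer on each model.
for model in ("8k", "32k"):
    print(f"{model}: ${estimate_cost(model, 1500, 500):.3f}")
```

For that example request, the 8k model works out to 1.5 × $0.03 + 0.5 × $0.06 = $0.075, and the 32k model to exactly twice that, $0.15.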

No, Bing Chat is only officially available on Microsoft’s Edge browser.

Conclusion

GPT-4 is a highly anticipated advancement in AI and natural language processing. Its recent release has brought a lot of excitement to the AI industry and to other industries as well. According to OpenAI, it is continually improving the model by gathering human feedback and working with experts on AI safety and security.

Ultimately, it is hard to overstate the potential of GPT-4 to transform AI software development and natural language processing. Businesses and sectors should keep an eye on further announcements while putting its current capabilities to use.